The rise of Artificial Intelligence (AI) has revolutionized the way we interact with technology, ushering in an era of automation and efficiency. From chatbots to self-driving cars, AI is becoming increasingly ubiquitous. However, as AI becomes more sophisticated, so does the potential for malicious use. AI can be leveraged to spread disinformation, discriminate against marginalized groups, and perpetuate harmful stereotypes. To combat these issues, researchers have pioneered a new field of AI that seeks to detect and neutralize the misuse of AI systems.

In this article, we’ll explore what “AI vs AI” means and the various AI-detecting AI apps and software that have been developed to detect and mitigate harmful AI. “AI vs AI” refers to the process of leveraging AI to detect and neutralize harmful uses of other AI systems. Essentially, it’s a way of fighting fire with fire.

The idea is that if we can develop AI that is advanced enough to detect and mitigate the harmful effects of other AI, we can prevent AI from being used to spread disinformation, discriminate against marginalized groups, and perpetuate harmful stereotypes.

There are several key methods by which AI can be used to neutralize other AI. For instance, it can be used to detect DeepFakes: images, videos, or audio that have been synthesized or manipulated with AI and may convey false information. DeepFakes can be used to spread disinformation or defame individuals, and detection tools can flag them before they are widely disseminated. Similarly, AI can be used to detect bias in other AI systems. Overall, “AI vs AI” aims to promote a more ethical and equitable use of AI.

Several AI-detecting AI apps and software tools have been developed to detect and mitigate harmful AI. Let’s explore some of them in detail.

GPTZero

GPTZero is a tool that estimates whether a text was written by a human or by an artificial intelligence (AI) tool such as ChatGPT. ChatGPT is an AI-powered chatbot that can generate realistic and coherent text in response to a prompt.

GPTZero was developed by Edward Tian, a Princeton University student. It works by analyzing the text with algorithms that measure metrics such as perplexity and burstiness. Perplexity measures how predictable the text is to a language model: the lower the perplexity, the less the text surprises the model. Burstiness measures how much that perplexity varies from sentence to sentence. Human writing tends to mix plain and surprising sentences, while AI-generated text tends to be more uniformly predictable. Based on these metrics, GPTZero assigns a score to the text and highlights the portions that are likely AI-generated.
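To make the two metrics concrete, here is a minimal sketch of how perplexity and burstiness could be computed. It is not GPTZero's implementation: it assumes a toy word-frequency model with add-one smoothing in place of a real language model, and the function names (`train_unigram`, `perplexity`, `burstiness`) are hypothetical.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def train_unigram(corpus: str) -> tuple[Counter, int]:
    """Count word frequencies in a reference corpus (a toy stand-in for a real language model)."""
    words = re.findall(r"[a-z']+", corpus.lower())
    return Counter(words), len(words)


def perplexity(sentence: str, counts: Counter, total: int) -> float:
    """Perplexity = exp(-mean log-probability) of the words under the model.

    Lower values mean the model finds the sentence more predictable.
    """
    words = re.findall(r"[a-z']+", sentence.lower())
    if not words:
        return 0.0
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen words
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab)) for w in words)
    return math.exp(-log_prob / len(words))


def burstiness(text: str, counts: Counter, total: int) -> float:
    """Spread (population standard deviation) of per-sentence perplexity."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    scores = [perplexity(s, counts, total) for s in sentences]
    return pstdev(scores) if len(scores) > 1 else 0.0
```

In this sketch, a text whose sentences all score about the same perplexity has a burstiness near zero. A real detector would score tokens with a large neural language model rather than with word counts.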

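GPTZero is reported to detect AI-generated text across languages with an accuracy rate of over 98%, although figures like this depend heavily on the language, the generator, and the length of the text being tested, and no detector rules out false positives. GPTZero also provides an API for organizations that want to integrate it into their systems.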

Hugging Face

Hugging Face is a machine learning company best known for Transformers, a popular open-source library for natural language processing (NLP), and for the Hugging Face Hub, where pre-trained models are shared. Transformers is not a detector in itself, but it makes it straightforward to load text classification models from the Hub, including models trained to recognize AI-generated text.

These detectors are deep learning models that have been pre-trained on a large corpus of text data and then fine-tuned to separate human-written from machine-generated samples. Rather than applying hand-written rules, they learn cues from the training data, such as linguistic patterns, style and tone, readability, and cohesion and structure.

The same text classification approach can sort text into other categories, such as positive/negative sentiment, toxic/non-toxic content, and offensive/non-offensive language. A detector is most reliable on text from the generators it was trained on, so results on output from newer or unseen generators should be treated with caution.

Overall, the detection models available through Hugging Face are a valuable tool for analyzing and classifying text data, and they have the potential to improve the efficiency and accuracy of a wide range of NLP applications.
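As a rough illustration of text classification with Transformers, the sketch below wraps a `text-classification` pipeline in a small helper. The model ID (`openai-community/roberta-base-openai-detector`, a GPT-2 output detector on the Hub) and its `"Fake"` label are assumptions to check against the model card. The helper names `load_detector` and `flag_ai_text` and the 0.9 threshold are hypothetical.

```python
from typing import Callable


def load_detector() -> Callable:
    """Build a text-classification pipeline (needs `pip install transformers torch`).

    The model ID is an assumption: swap in whichever detector you have evaluated.
    """
    from transformers import pipeline  # imported lazily so flag_ai_text has no dependencies

    return pipeline(
        "text-classification",
        model="openai-community/roberta-base-openai-detector",
    )


def flag_ai_text(
    texts: list[str],
    classifier: Callable,
    ai_label: str = "Fake",  # assumed label name for machine-generated text
    threshold: float = 0.9,  # hypothetical cut-off; tune on your own data
) -> list[bool]:
    """Return True for each text the classifier labels as AI-written with enough confidence."""
    results = classifier(texts)  # one {"label": ..., "score": ...} dict per input text
    return [r["label"] == ai_label and r["score"] >= threshold for r in results]
```

A typical call would be `flag_ai_text(["some text"], load_detector())`. Because the classifier is passed in as an argument, the helper can be tested with a stub and reused with a different model.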

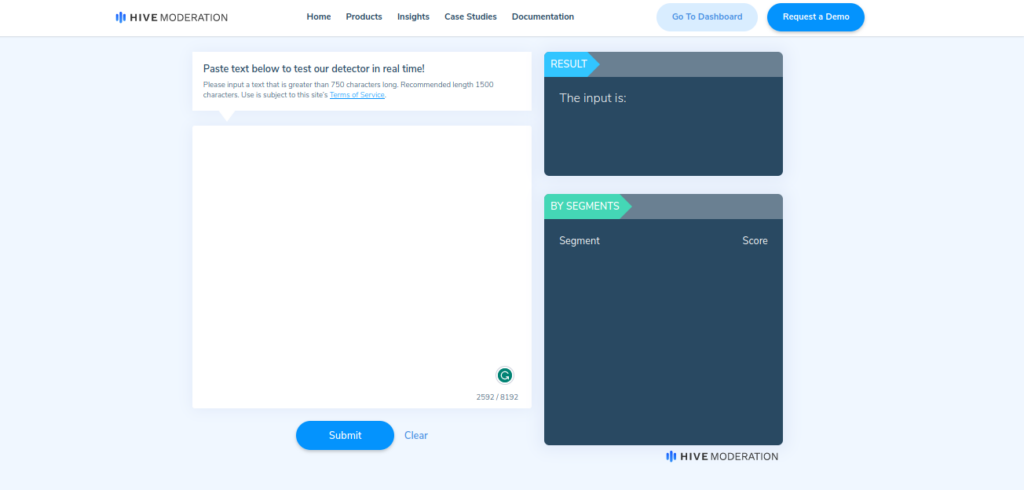

Hive Moderation

Hive is an American artificial intelligence company offering deep learning models via APIs to enterprise customers. Hive operates a distributed microtask platform to collect the training data that feeds into its models.

The company uses natural language processing and machine learning algorithms to detect whether a piece of content is human-authored or originates from artificial intelligence systems. This capability provides crucial protection against nefarious actors who might try to exploit AI-driven text generation programs to deceive unsuspecting readers.

To achieve optimum accuracy, Hive Moderation draws upon a rich corpus of synthetic and real-world data samples while developing its AI models. Training on large, varied datasets helps the models classify fresh content reliably when clients run it through Hive Moderation’s system.

For organizations concerned about AI-generated malicious material seeping into their platforms, Hive Moderation offers tailor-made plans for enhanced security. These packages combine rule-based systems with ML and NLP technologies to deliver robust protection around the clock.
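The general idea of pairing rules with a model score can be sketched as follows. This is an illustration of the pattern only, not Hive's system or API: the blocked phrases, the `moderate` function, the `ml_score` callable, and the 0.8 threshold are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical hand-written rules: phrases that are always blocked.
BLOCKED_PATTERNS = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (r"\bfree money\b", r"\bclick here now\b")
]


def moderate(text: str, ml_score: Callable[[str], float], threshold: float = 0.8) -> str:
    """Apply cheap, explicit rules first, then fall back to a model score in [0, 1]."""
    if any(pattern.search(text) for pattern in BLOCKED_PATTERNS):
        return "blocked_by_rule"
    if ml_score(text) >= threshold:
        return "flagged_by_model"
    return "allowed"
```

Running the rules first keeps obvious cases fast and explainable, while the model handles content that no rule anticipates.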

Copyleaks

Copyleaks is a commercial plagiarism and AI content detection platform. One of its key features is the ability to detect text that was generated by artificial intelligence rather than written by a person.

Copyleaks uses machine learning techniques to recognize patterns and characteristics commonly associated with AI-generated text. By analyzing elements such as word choice, sentence length, grammar, and readability level, Copyleaks aims to distinguish between material written by humans and material produced by AI.
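Signals like word choice and sentence length can be turned into numbers that a classifier consumes. The sketch below is a simplified illustration, not the platform's actual feature set: the `style_features` function and the three features it returns are assumptions chosen for clarity.

```python
import re


def style_features(text: str) -> dict[str, float]:
    """Compute a few simple stylometric features from raw text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    if not sentences or not words:
        return {"avg_sentence_len": 0.0, "type_token_ratio": 0.0, "avg_word_len": 0.0}
    return {
        # Words per sentence: a rough proxy for sentence length.
        "avg_sentence_len": len(words) / len(sentences),
        # Distinct words divided by total words: a rough proxy for word-choice variety.
        "type_token_ratio": len(set(words)) / len(words),
        # Mean characters per word: a crude readability signal.
        "avg_word_len": sum(len(w) for w in words) / len(words),
    }
```

On their own these features say little. In practice they would be fed, alongside many others, into a model trained on labeled human and AI text.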

Additionally, Copyleaks employs string similarity algorithms to determine whether a given block of text closely matches known samples. With this suite of detection capabilities, users can rely on Copyleaks to help ensure that only original content from reputable sources enters their databases.
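String similarity matching can be illustrated with Python's standard `difflib` module. This is a generic sketch of the technique, not the platform's algorithm: the `best_match` helper and the idea of a list of known samples are assumptions.

```python
from difflib import SequenceMatcher


def best_match(candidate: str, known_samples: list[str]) -> tuple[str, float]:
    """Return the known sample most similar to `candidate` and its similarity in [0, 1].

    Raises ValueError if `known_samples` is empty.
    """
    scored = [
        (sample, SequenceMatcher(None, candidate.lower(), sample.lower()).ratio())
        for sample in known_samples
    ]
    return max(scored, key=lambda pair: pair[1])
```

A caller would compare the returned ratio against a threshold of its choosing. Pairwise comparison like this is quadratic in text length, so large-scale systems typically index fingerprints or hashes instead.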

The platform also offers customization options for individual use cases. Users may adjust settings according to industry requirements or preferences to improve the precision of AI content detection.

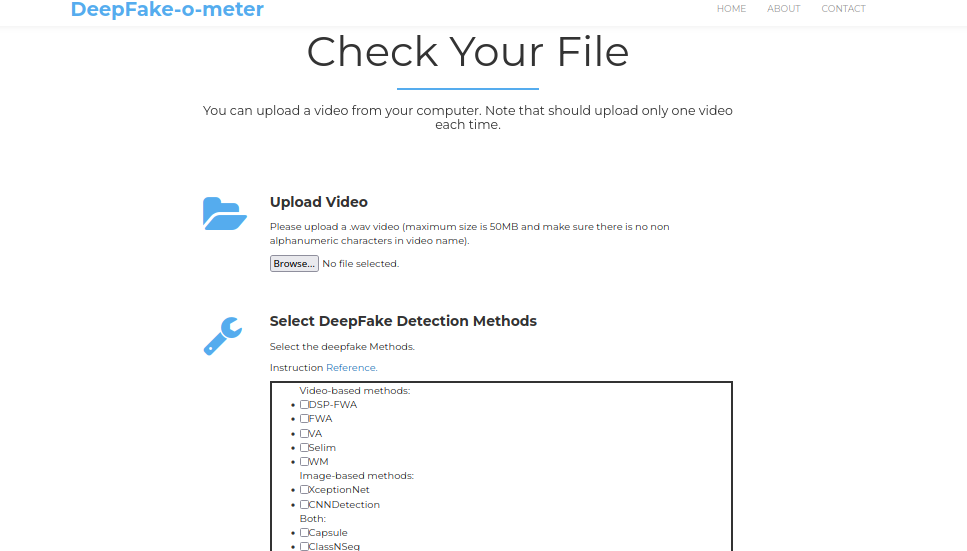

DeepFake-O-Meter

DeepFake-O-Meter (DFOM) is an open-source software project which enables individuals to quickly verify if a video clip contains synthesized visual components. Using machine learning and computer vision, DFOM analyzes facial expressions and movements to determine whether they are authentic or recreated through artificial intelligence.

DFOM was reportedly developed in collaboration with the State Department’s Global Engagement Center, with funding from Facebook, OpenAI, and others, and became publicly available in early May 2021. Since then, it has reportedly been downloaded from GitHub by thousands of users.

DFOM’s detection algorithms are trained on large datasets of known DeepFakes and authentic footage so that they learn to spot telltale signs of manipulation, such as subtle facial cues, lip sync anomalies, and sudden changes in expression. When analyzing a video, the program also measures how much motion occurs over time within sub-regions of each frame. Real faces move continuously and somewhat irregularly, so face sub-regions whose motion is unnaturally consistent from frame to frame tend to indicate that those regions have been deepfaked.
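The sub-region motion idea can be sketched in a few lines. This is a toy heuristic under stated assumptions, not DFOM's actual detectors: frames are plain lists of grayscale pixel rows, and the `region_motion` and `suspicious_regions` helpers, the block size, and the variance threshold are all hypothetical.

```python
from statistics import pvariance

Frame = list[list[int]]  # rows of grayscale pixel values


def region_motion(prev: Frame, curr: Frame, block: int) -> dict[tuple[int, int], float]:
    """Mean absolute pixel change in each block-by-block sub-region between two frames."""
    motion = {}
    height, width = len(curr), len(curr[0])
    for top in range(0, height, block):
        for left in range(0, width, block):
            diffs = [
                abs(curr[y][x] - prev[y][x])
                for y in range(top, min(top + block, height))
                for x in range(left, min(left + block, width))
            ]
            motion[(top // block, left // block)] = sum(diffs) / len(diffs)
    return motion


def suspicious_regions(frames: list[Frame], block: int, max_variance: float) -> list[tuple[int, int]]:
    """Regions that do move, but whose motion barely varies from one frame step to the next."""
    per_step = [region_motion(a, b, block) for a, b in zip(frames, frames[1:])]
    if len(per_step) < 2:  # need at least three frames to measure variation
        return []
    flagged = []
    for region in per_step[0]:
        series = [step[region] for step in per_step]
        if sum(series) > 0 and pvariance(series) <= max_variance:
            flagged.append(region)
    return flagged
```

A production detector would first locate and align the face, and would rely on learned features rather than raw pixel differences.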

According to the developers’ demo page, when tested on numerous celebrity and public figure impersonations, the tool reliably flagged DeepFakes with near-human levels of performance, even besting certain benchmarks.

Sensity

Sensity.ai is an AI company that focuses on detecting and analyzing AI-generated content, including DeepFakes. DeepFakes can be used to spread misinformation, manipulate public opinion, and damage reputations. Sensity.ai has developed state-of-the-art software that uses advanced machine learning algorithms to identify DeepFakes in videos, images, and audio recordings. Their technology can help prevent the spread of DeepFakes and protect individuals and organizations from potential harm. By detecting and categorizing DeepFakes, Sensity.ai is at the forefront of preserving the integrity of information and ensuring trust in media sources.

Conclusion

As AI becomes more ubiquitous in our daily lives, the potential for its malicious use also increases. However, the field of “AI vs AI” offers a promising solution to this problem. By leveraging AI to detect and mitigate the harmful effects of other AI systems, we can promote a more ethical and equitable use of AI. With the development of AI-detecting AI apps and software, we have the tools to combat the potential misuse of AI and ensure that it is used for the betterment of society.